Parallel software will clearly benefit from validation involving deadlock detection (such as the Linux kernel's lockdep facility), other static and dynamic checks for proper use of synchronization primitives, and lots of stress testing. After all, the purpose of QA is to reduce the probability of the system failing for the user. A good way of reducing this probability is to conduct validation that greatly increases the probability of such failure, preferably while the developers still have the offending code fresh in their minds.

For example, if your software must handle CPU hotplug, then your testing had better force it to handle a great many CPU-hotplug events. If it passes a few thousand random CPU-hotplug events, there is a good chance that it will survive many years of CPU hotplugging in normal use. Of course, user-mode applications are normally unaware of CPU-hotplug events, but they often must cope with network outages, bogus user input, memory sticks coming and going, initialization, shutdown, and much else besides. Do your users a favor and thoroughly torture your software right from the get-go.
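To make "a few thousand random CPU-hotplug events" concrete, here is a minimal sketch of a hotplug storm on Linux. It assumes the usual sysfs layout, in which writing `0` or `1` to `/sys/devices/system/cpu/cpuN/online` takes a CPU offline or brings it back. The function names, the `cpu_root` parameter, and the `seed` parameter are my own inventions for illustration, and a real torture test would run the software under test concurrently with the storm.

```python
import random
from pathlib import Path


def hotpluggable_cpus(cpu_root):
    """Return the 'online' control files of CPUs that can be hotplugged."""
    # CPUs that cannot be taken offline simply have no 'online' file.
    return sorted(Path(cpu_root).glob("cpu[0-9]*/online"))


def hotplug_storm(cpu_root, events, seed=None):
    """Flip randomly chosen CPUs between online and offline.

    Returns the number of flips that succeeded. The cpu_root parameter
    is a hypothetical knob so that the sketch can be tried on a scratch
    directory instead of the real sysfs tree.
    """
    rng = random.Random(seed)  # a fixed seed makes a failing run repeatable
    controls = hotpluggable_cpus(cpu_root)
    if not controls:
        raise RuntimeError("no hotpluggable CPUs found")
    flips = 0
    try:
        for _ in range(events):
            control = rng.choice(controls)
            state = control.read_text().strip()
            try:
                control.write_text("0" if state == "1" else "1")
            except OSError:
                # The kernel may refuse, for example when asked to
                # offline the last remaining CPU. Just move on.
                continue
            flips += 1
    finally:
        # Leave the system with every CPU back online.
        for control in controls:
            control.write_text("1")
    return flips
```

On a real system this needs root, for example `hotplug_storm("/sys/devices/system/cpu", 5000)`. Recording the seed is worth the trouble, because it lets you replay the same sequence of events after a failure.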

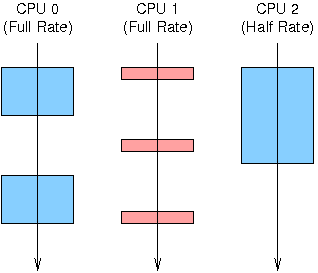

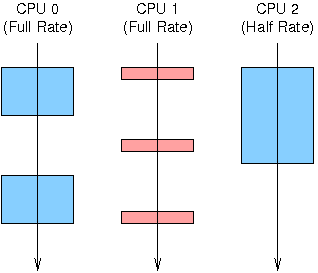

A little-known way to make certain types of races more likely to occur is to run CPUs at different frequencies. To see this, consider the diagram above.

Here CPU 1 is running a periodic task, indicated by the pink rectangles, while CPU 0 is concurrently running a task indicated by the blue rectangles. Assume that there is a bug (AKA "race condition") that will cause a failure if two consecutive instances of CPU 1's task overlap a single instance of CPU 0's task. If both CPUs are running at full rate, this overlap will not happen unless CPU 0's task is delayed by interrupts, preemption, memory errors, or some other relatively low-probability event. In contrast, suppose that CPU 2 runs the blue task. Because CPU 2 is running at half the clock frequency, the blue task takes twice as long, so overlap is guaranteed (at least unless CPU 1 is delayed).
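The effect of halving the clock rate can be checked with a toy model. The sketch below is not a reconstruction of the diagram: the period, task lengths, and function names are all assumptions chosen for illustration. It treats the pink task as running for `pink_len` time units at the start of every `period`, starts the blue task at evenly spaced offsets within one period, and reports the fraction of start times for which a single blue instance overlaps two consecutive pink instances.

```python
def overlapped_instances(start, duration, period, pink_len):
    """Indices of periodic (pink) instances overlapping [start, start + duration)."""
    end = start + duration
    hits = []
    k = max(0, int(start // period) - 1)
    while k * period < end:
        pink_start = k * period
        pink_end = pink_start + pink_len
        # Two half-open intervals overlap if each starts before the other ends.
        if pink_start < end and start < pink_end:
            hits.append(k)
        k += 1
    return hits


def race_fraction(work, clock_ratio, period=10.0, pink_len=2.0, samples=1000):
    """Fraction of blue-task start offsets that hit the assumed race.

    work is the blue task's run time at full clock rate, and clock_ratio
    is the blue CPU's speed relative to full rate (0.5 means half speed).
    All default values are made-up numbers for the toy model.
    """
    duration = work / clock_ratio  # a slower clock stretches the task
    races = 0
    for i in range(samples):
        start = period * i / samples
        # The overlapped instances are always consecutive, so two or
        # more of them is exactly the failure condition.
        if len(overlapped_instances(start, duration, period, pink_len)) >= 2:
            races += 1
    return races / samples


if __name__ == "__main__":
    print("full rate:", race_fraction(work=8.0, clock_ratio=1.0))
    print("half rate:", race_fraction(work=8.0, clock_ratio=0.5))
```

With these assumed numbers, the blue task at full rate fits within the gap between pink instances and so cannot overlap two of them, while at half rate most start offsets trigger the overlap. Whether the overlap is outright guaranteed depends on how the two tasks are phased, which this model deliberately leaves random.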

Of course, this example is pure supposition. However, I have seen this effect in real life. In fact, at Sequent, we often ran tests on systems with CPUs running at different clock rates in order to flush out this sort of race condition. Now that modern multicore computer systems are starting to permit CPUs running at different clock rates, you would be well-advised to do the same for your shared-memory parallel software.
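On Linux systems with cpufreq support, one way to approximate this setup is to cap some CPUs at their lowest supported frequency. The following is a rough sketch that assumes the standard per-CPU sysfs files `cpufreq/cpuinfo_min_freq` and `cpufreq/scaling_max_freq`. The function name, the choice of slowing the odd-numbered CPUs, and the `cpu_root` parameter are arbitrary choices of mine. Note also that on hardware where several CPUs share one frequency policy, capping one CPU may slow its siblings as well.

```python
from pathlib import Path


def skew_cpu_clocks(cpu_root="/sys/devices/system/cpu"):
    """Cap every odd-numbered CPU at its minimum frequency.

    Returns the sorted list of CPU numbers that were slowed. Which CPUs
    to slow is an arbitrary choice made for this sketch.
    """
    slowed = []
    for policy in Path(cpu_root).glob("cpu[0-9]*/cpufreq"):
        cpu = int(policy.parent.name[3:])  # "cpu12" -> 12
        if cpu % 2 == 0:
            continue  # leave even-numbered CPUs at full speed
        lowest = (policy / "cpuinfo_min_freq").read_text().strip()
        # Lowering the ceiling keeps the governor from clocking this CPU up.
        (policy / "scaling_max_freq").write_text(lowest)
        slowed.append(cpu)
    return sorted(slowed)
```

Like the hotplug files, these require root to write. To undo the change, write the contents of `cpuinfo_max_freq` back to `scaling_max_freq` for each slowed CPU.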

In general, the goal of testing and other forms of quality assurance is to reduce the probability that end users will encounter bugs. One very effective way to achieve this goal is to structure your testing so as to greatly increase the probability of encountering bugs. Running different CPUs at different clock rates is but one way of increasing this probability.